Advanced Machine Learning

18: Algorithmic Differentiation

Outline for the lecture

-

Algorithmic differentiation

-

Forward mode AD

-

Backpropagation

-

Reverse mode AD

Algorithmic Differentiation (AD)

".. may be one of the best scientific computing techniques you’ve never heard of."

Alexey Radul

The very first computer science PhD dissertation introduced forward accumulation mode automatic differentiation.

Wengert (1964)

Robert Edwin Wengert. A simple automatic derivative evaluation program. Communications of the ACM 7(8):463–4, Aug 1964.

A procedure for automatic evaluation of total/partial derivatives of arbitrary algebraic functions is presented. The technique permits computation of numerical values of derivatives without developing analytical expressions for the derivatives. The key to the method is the decomposition of the given function, by introduction of intermediate variables, into a series of elementary functional steps. A library of elementary function subroutines is provided for the automatic evaluation and differentiation of these new variables. The final step in this process produces the desired function’s derivative. The main feature of this approach is its simplicity. It can be used as a quick-reaction tool where the derivation of analytical derivatives is laborious and also as a debugging tool for programs which contain derivatives.

R. E. Bellman, H. Kagiwada, and R. E. Kalaba (1965) Wengert’s numerical method for partial derivatives, orbit determination and quasilinearization, Communications of the ACM 8(4):231–2, April 1965, doi:10.1145/363831.364886

In a recent article in the Communications of the ACM, R. Wengert suggested a technique for machine evaluation of the partial derivatives of a function given in analytical form. In solving nonlinear boundary-value problems using quasilinearization many partial derivatives must be formed analytically and then evaluated numerically. Wengert’s method appears very attractive from the programming viewpoint and permits the treatment of large systems of differential equations which might not otherwise be undertaken.

Automatic Differentiation (AD) mechanically calculates the derivatives (Leibnitz, 1664; Newton, 1704) of functions expressed as computer programs, at machine precision, and with complexity guarantees.

Automatic Differentiation

- Derivative of $f: \RR^n \to \RR^m$ is $m\times n$ "Jacobian matrix" $\bm{J}$.

- AD in the forward accumulation mode: $\bm{J}v$ (Wengert, 1964)

- AD in the reverse accumulation mode, $\bm{J}^Tv$ (Speelpenning, 1980)

- About a zillion other modes and tricks

- Vibrant field with regular workshops, conferences, updated community portal (http://autodiff.org)

What is AD?

- Automatic Differentiation

- aka Algorithmic Differentiation

- aka Computational Differentiation

- aka Algorithmic Differentiation

- AD Type I

- A calculus for efficiently calculating derivatives of functions specified by a set of equations.

- AD Type II

- A way of transforming a computer program implementing a numeric function to also efficiently calculate some derivatives.

- AD Type III

- A computer program which automatically transforms an input computer program specifying a numeric function into one that also efficiently calculates derivatives.

AD is not Numerical Differentiation

Numerical diffirentiation is a problematic approximation

- though shalt not add small numbers to big numbers

- though shalt not subtract numbers which are approximately equal

What is wrong with Numerical Differentiation

AD is not Symbolic Differentiation

\begin{align} f(\vec{x}) & = \prod_{i=1}^d x_i \\ \nabla f(\vec{x}) & = \left(\frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, \dots, \frac{\partial f}{\partial x_d},\right) = \left(\begin{array}{ccccc} x_2x_3x_4\cdots x_{d-1}x_d, \\ x_1x_3x_4\cdots x_{d-1}x_d, \\ x_1x_2x_4\cdots x_{d-1}x_d, \\ \vdots \\ x_1x_2x_3\cdots x_{d-1} \\ \end{array}\right) \end{align}

Example from Speelpenning, figure modified from Baydin

For an alternative opinion see: On the Equivalence of Forward Mode Automatic Differentiation and Symbolic Differentiation

For an alternative opinion see: On the Equivalence of Forward Mode Automatic Differentiation and Symbolic Differentiation

We want exact derivative at a point!

Forward Mode AD

AD is

Figure modified from Baydin

AD is

Figure from Baydin

AD graph

$$ y = f(x_1, x_2) = \ln(x_1) + x_1x_2 - \sin(x_2) $$AD trace

AD

AD: a Jacobian column in a pass

AD: directional derivative

Dual numbers (1873)

\begin{align} v + \dot{v}\epsilon &\\ v, \dot{v} \in \RR, & \epsilon \ne 0, \epsilon^2 = 0 \\ (v + \dot{v}\epsilon) + (u + \dot{u}\epsilon) & = (v + u) + (\dot{v} + \dot{u})\epsilon\\ (v + \dot{v}\epsilon)(u + \dot{u}\epsilon) & = (vu) + (v\dot{u} + u\dot{v})\epsilon\\ f(v+\dot{v}\epsilon) & = f(v) + f^{\prime}(v)\dot{v}\epsilon \end{align}

Clifford (1873)

Back propagation

the classical presentation

Figure from Baydin

Reverse Mode AD

In the 1970s, tools for automated generation of adjoint codes (aka reverse accumulation mode automatic differentiation, aka backpropagation) were developed.

- Type I

- Geniuses transforming mathematical systems (Gauss; Feynman (1939); Rozonoer and Pontryagin (1959))

- Type II

- Manual transformation of computational processes (Bryson (1962); Werbos (1974); Le Cun (1985); Rumelhart et al. (1986))

- Type III

- Computer programs transform other computer programs (Speelpenning (1980); LUSH; TAPENADE)

- Type IV

- First-class AD operators; closure (STALIN$\nabla$; R$^6$RS-AD; AUTOGRAD; DIFFSHARP)

Bert Speelpenning

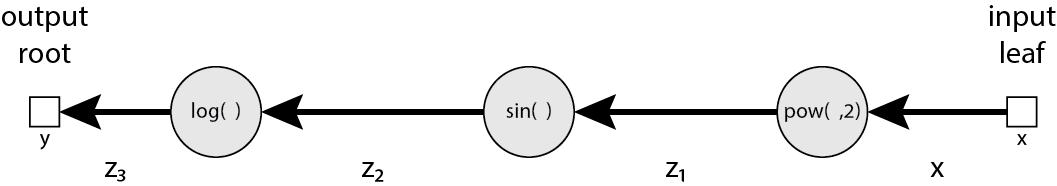

\begin{align} y &= \log(\sin(x^2)) \end{align}

- Traces:

- Primal

- Tangent Derivative

- Cotangent Derivative

- Direction:

- $\rightarrow$ Forward

- $\rightarrow$ Forward

- $\leftarrow$ Reverse

Computation Graph

- Following precedence rules

- binary/n-ary operators allowed $\rightarrow$ DAG (tree)

- $y =$ root, $x =$ leaves

Intermediate Variables: $z_i$

\begin{align} x &\\ z_1 &= x^2\\ z_2 &= \sin(z_1)\\ z_3 &= \log(z_2)\\ y &= z_3\\ \end{align}

Adjoint: $\bar{z_i} = \frac{\partial y}{\partial z_i}$

Example

\begin{align} x &\\ z_1 &= x^2\\ z_2 &= \sin(z_1)\\ z_3 &= \log(z_2)\\ y &= z_3\\ \end{align}

- $\bar{x} = \left(\left(\frac{\partial y}{\partial z_3}\frac{\partial z_3}{\partial z_2}\right) \frac{\partial z_2}{\partial l z_1}\right)\frac{\partial z_1}{\partial x}$

- $\bar{x} = \frac{\partial y}{\partial x} = \frac{\partial y}{\partial z_1}\frac{\partial z_1}{\partial x} = \bar{z}_1 2 x$

- $\bar{z}_1 = \frac{\partial y}{\partial z_1} = \frac{\partial y}{\partial z_2}\frac{\partial z_2}{\partial z_1} = \bar{z}_ 2\mathrm{cos}(z_1)$

- $\bar{z}_2 = \frac{\partial y}{\partial z_2} = \frac{\partial y}{\partial z_3}\frac{\partial z_3}{\partial z_2} = \bar{y}\ \frac{1}{z_2}$

- $\bar{z}_3 = \frac{\partial y}{\partial z_3} = \frac{\partial y}{\partial y} = \bar{y} = 1$ (seed)

\begin{align} \bar{x} & \fragment{6}{= \bar{z}_1 2 x}\\ &\fragment{7}{= (\bar{z}_2\mathrm{cos}(z_1) ) 2 x}\\ &\fragment{8}{= \left(\left(\bar{z}_3 \frac{1}{z_2} \right) \mathrm{cos}(x^2) \right) 2 x}\\ &\fragment{9}{= \left(\left( \frac{1}{\mathrm{sin}(z_1)} \right) \mathrm{cos}(x^2) \right) 2 x}\\ &\fragment{10}{= \left(\left( \frac{1}{\mathrm{sin}(x^2)} \right) \mathrm{cos}(x^2) \right) 2 x}\\ &\fragment{11}{= \left( \mathrm{cot}(x^2) \right) 2 x\ = 2 x ~\mathrm{tan} \left( \frac{\pi}{2} - x^2\right)}\\ \end{align}

References

- Appendix III of https://arxiv.org/pdf/1911.04048.pdf

- https://arxiv.org/pdf/1502.05767.pdf

- https://arxiv.org/pdf/1703.02311.pdf